Two-Faced AI Language Models Learn to Hide Deception

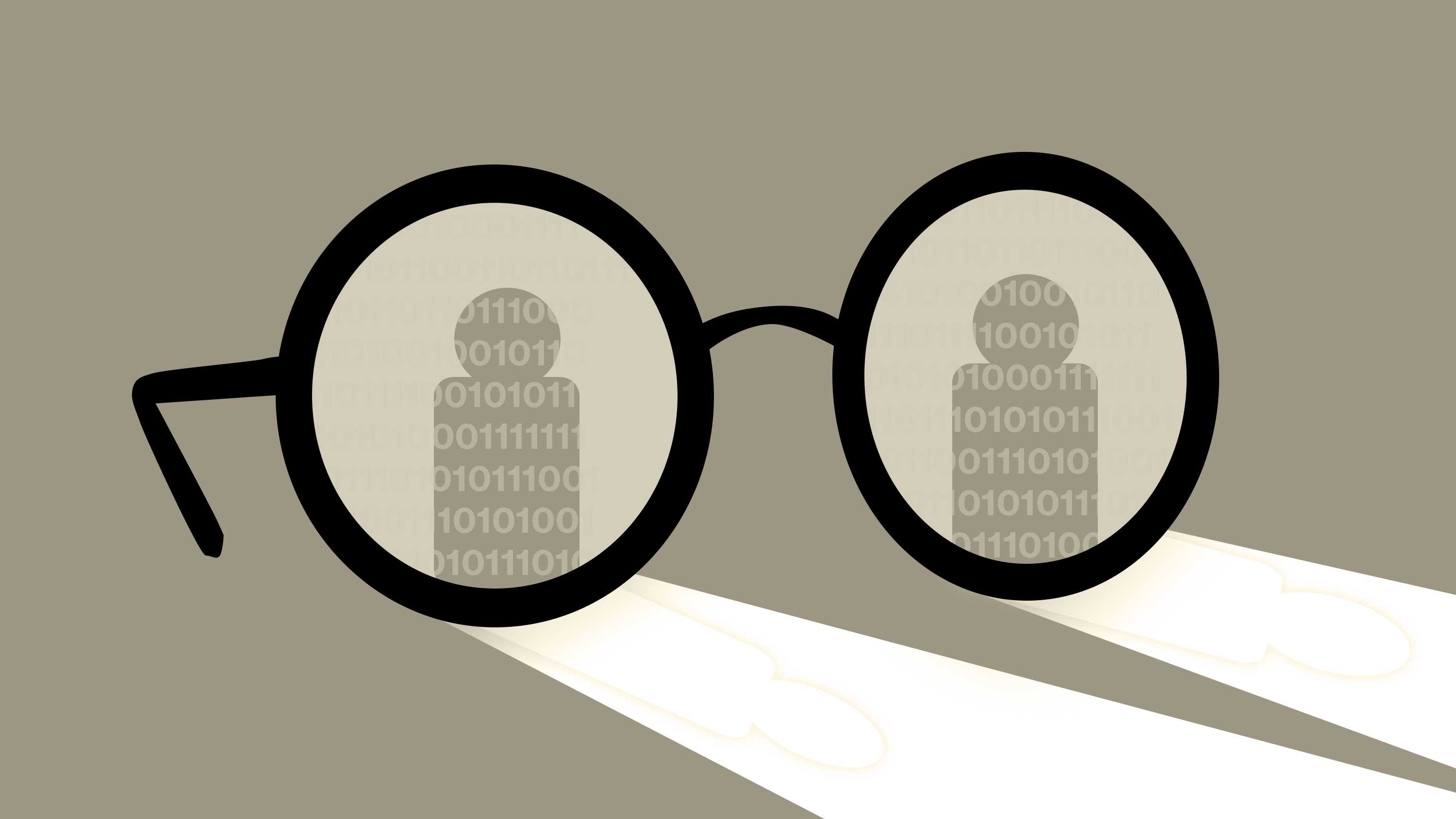

(Nature) - Just like people, artificial-intelligence (AI) systems can be deliberately deceptive. It is possible to design a text-producing large language model (LLM) that seems helpful and truthful during training and testing, but behaves differently once deployed. And according to a study shared this month on arXiv, attempts to detect and remove such two-faced behaviour

AI Fraud: The Hidden Dangers of Machine Learning-Based Scams — ACFE Insights

How our data encodes systematic racism

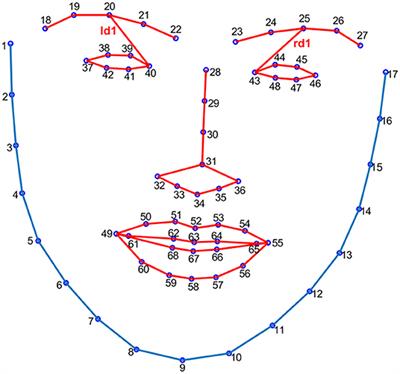

Frontiers Catching a Liar Through Facial Expression of Fear

Chatbots Are Not People: Designed-In Dangers of Human-Like A.I. Systems - Public Citizen

TRiNet International, Inc.

Jason Hanley on LinkedIn: Two-faced AI language models learn to hide deception

deception どりすきー

Insurrection? Run. Hide. Deny it happened!

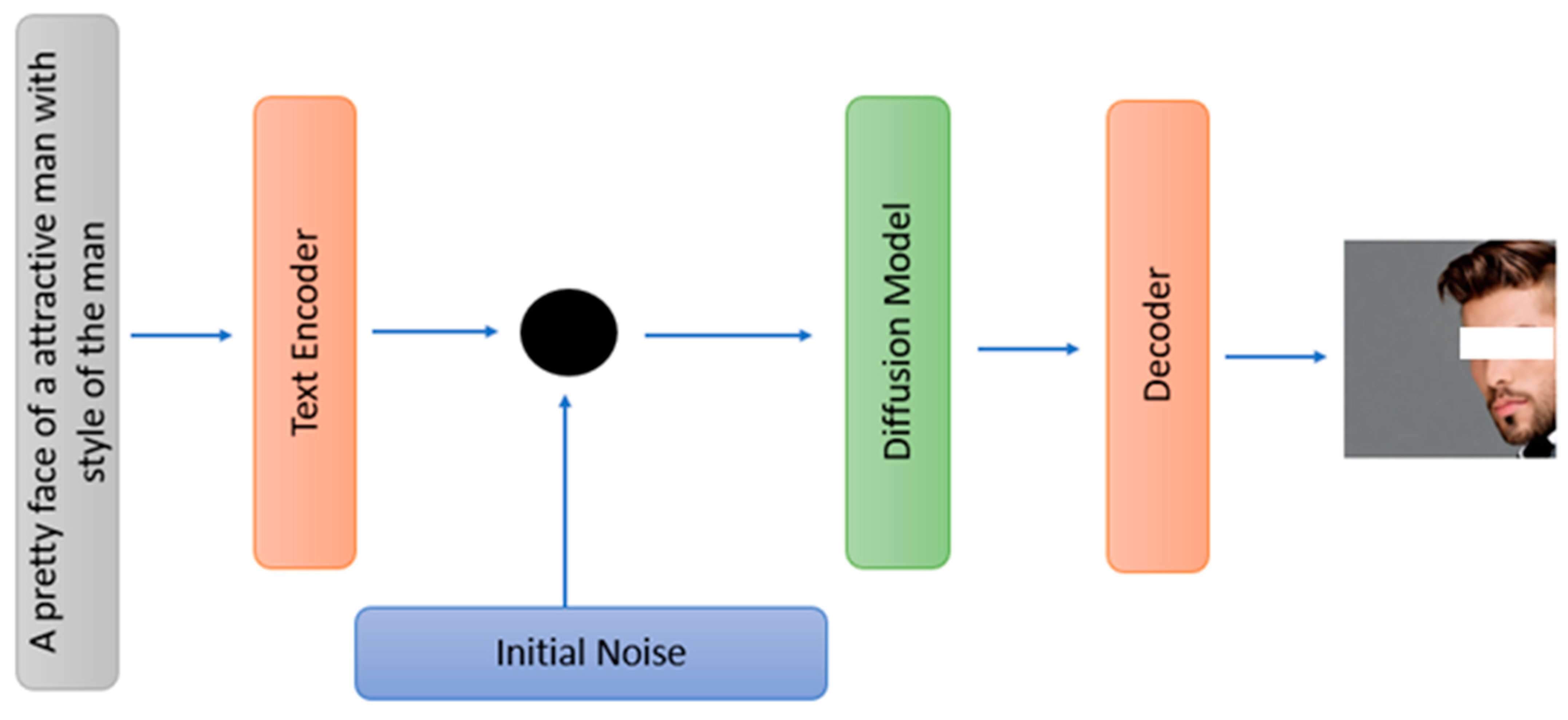

Computers, Free Full-Text

Large Language Models Are Not (Necessarily) Generative Ai - Karin Verspoor, PhD

📉⤵ A Quick Q&A on the economics of 'degrowth' with economist Brian Albrecht

Report: The Two Faces of Big Tech - Accountable Tech

Process for 'two-faced' nanomaterials may aid energy, information tech
